-

At the introduction of the new Apple TV, one of the first features demoed was the parallax animation and Siri remote trackpad gesture combo used when navigating the UI. The 1:1 tracking of your thumb to what’s onscreen is great UX.

Apple provides a means for developers to utilize this effect in their applications, but for now it only works with a special type of `UIImage`. This is great for movie posters and other static content, but not as useful in other scenarios. When designing Yayy, we felt it was important for GIFs to play directly in the channel browser. We couldn’t use the `UIImage` approach for our movie players and we wanted to overlay text on these as well, so early versions didn’t include any parallax effect. While it looked great, it didn't feel great. Something was lost when that tactile feedback went away.

We knew we’d need to come up with an approach that combined arbitrary `UIView` hierarchies and a tactile parallax effect. After some fiddling, we arrived at an easy-to-implement way to achieve a nice-feeling parallax effect using `UIPanGestureRecognizer` and some `UIView` transforms. The end effect is something like this…

Here's how to do it.

Yayy’s channel browser is a `UICollectionView`, and the cells parallax when they are the focused view. Start by defining a protocol for `UICollectionViewCell` to understand the concept of focus.

```swift
protocol FocusableCell {
    func setFocused(focused: Bool, withAnimationCoordinator coordinator: UIFocusAnimationCoordinator)
}
```

Then in the `UICollectionViewDelegate`, message the appropriate cells on focus/unfocus.

```swift
override func collectionView(collectionView: UICollectionView,
    didUpdateFocusInContext context: UICollectionViewFocusUpdateContext,
    withAnimationCoordinator coordinator: UIFocusAnimationCoordinator) {
    // Tell the newly focused cell that it gained focus.
    if let nextIndexPath = context.nextFocusedIndexPath,
        focusCell = collectionView.cellForItemAtIndexPath(nextIndexPath) as? FocusableCell {
        focusCell.setFocused(true, withAnimationCoordinator: coordinator)
    }
    // Tell the previously focused cell that it lost focus.
    if let previousIndexPath = context.previouslyFocusedIndexPath,
        focusCell = collectionView.cellForItemAtIndexPath(previousIndexPath) as? FocusableCell {
        focusCell.setFocused(false, withAnimationCoordinator: coordinator)
    }
}
```

Each `UICollectionViewCell` will create and manage its own `UIPanGestureRecognizer`, adding or removing it on focus when appropriate.

```swift
func setFocused(focused: Bool, withAnimationCoordinator coordinator: UIFocusAnimationCoordinator) {
    coordinator.addCoordinatedAnimations({ () -> Void in
        // Zoom in when focused, return to normal size when not.
        self.transform = focused ? self.focusedTransform : CGAffineTransformIdentity
        // any other focus-based UI state updating
    }, completion: nil)

    if focused {
        contentView.addGestureRecognizer(panGesture)
    } else {
        contentView.removeGestureRecognizer(panGesture)
    }
}

private lazy var panGesture: UIPanGestureRecognizer = {
    let pan = UIPanGestureRecognizer(target: self, action: Selector("viewPanned:"))
    pan.cancelsTouchesInView = false
    return pan
}()

// The cells zoom when focused.
var focusedTransform: CGAffineTransform {
    return CGAffineTransformMakeScale(1.15, 1.15)
}
```

The `UICollectionViewCell` will now be receiving updates as the trackpad is swiped. It’s a matter of updating the view in response to that, using the initial location of the `UIPanGestureRecognizer` as a reference point. In my testing, I found that the maximum translation a pan would provide, moving from edge to edge of the trackpad, maps to roughly the size of your view in any direction. Multiplying the raw value by a coefficient allows the effect to be tuned. Different views can be given different multipliers to give the illusion of depth (bigger multipliers move more and thus appear closer).

```swift
var initialPanPosition: CGPoint?

func viewPanned(pan: UIPanGestureRecognizer) {
    switch pan.state {
    case .Began:
        initialPanPosition = pan.locationInView(contentView)
    case .Changed:
        if let initialPanPosition = initialPanPosition {
            let currentPosition = pan.locationInView(contentView)
            let diff = CGPoint(
                x: currentPosition.x - initialPanPosition.x,
                y: currentPosition.y - initialPanPosition.y
            )

            // Scale the pan so a swipe as long as the cell moves it 16 points.
            let parallaxCoefficientX = 1 / bounds.width * 16
            let parallaxCoefficientY = 1 / bounds.height * 16

            // Transform is the default focused transform, translated.
            transform = CGAffineTransformConcat(
                focusedTransform,
                CGAffineTransformMakeTranslation(
                    diff.x * parallaxCoefficientX,
                    diff.y * parallaxCoefficientY
                )
            )

            // The label (a subview of the cell) gets its own coefficients.
            let labelParallaxCoefficientX = 1 / bounds.width * 16
            let labelParallaxCoefficientY = 1 / bounds.height * 24
            nameLabel.transform = CGAffineTransformMakeTranslation(
                diff.x * labelParallaxCoefficientX,
                diff.y * labelParallaxCoefficientY
            )
            // Apply to other views as needed.
        }
    default:
        // .Canceled, .Failed, etc.. return the view to its default state.
        UIView.animateWithDuration(0.3,
            delay: 0,
            usingSpringWithDamping: 0.8,
            initialSpringVelocity: 0,
            options: .BeginFromCurrentState,
            animations: { () -> Void in
                self.transform = self.focusedTransform
                self.nameLabel.transform = CGAffineTransformIdentity
            },
            completion: nil)
    }
}
```

The tvOS focus engine handles transitioning between cells, so tweak the coefficients until they feel right while the cell is focused (for instance, Yayy uses a slightly different coefficient for horizontal and vertical movement). Obviously this doesn’t do the tilt and shine as seen in Apple’s implementation. While that wasn’t something we decided to emulate for Yayy, it’s totally doable. MPParallaxView provides an example of how to achieve that visual effect.
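If it helps when tuning, the coefficient math described above can be pulled out of the gesture handler and tried on its own. This is only a rough sketch, not Yayy's code: `Offset`, `parallaxOffset`, and `maxTravel` are names made up for illustration, it uses plain `Double` values instead of `CGPoint` so it runs without UIKit, and it is written in current Swift syntax rather than the Swift 2 of the cell code.

```swift
// Hypothetical helper type; not part of UIKit or Yayy.
struct Offset: Equatable {
    var x: Double
    var y: Double
}

/// Maps a pan delta (in the view's coordinate space) to a parallax translation.
/// `maxTravel` is how far, in points, the view moves for a pan as long as the view itself.
func parallaxOffset(panDelta: Offset, viewWidth: Double, viewHeight: Double, maxTravel: Double) -> Offset {
    // Guard against zero-sized views so we never divide by zero.
    guard viewWidth > 0, viewHeight > 0 else { return Offset(x: 0, y: 0) }
    return Offset(
        x: panDelta.x / viewWidth * maxTravel,
        y: panDelta.y / viewHeight * maxTravel
    )
}

// A pan that covers the full width and half the height of a 300x300 cell.
let delta = Offset(x: 300, y: -150)

// Larger maxTravel values move further, so that layer appears closer to the viewer.
let cellOffset = parallaxOffset(panDelta: delta, viewWidth: 300, viewHeight: 300, maxTravel: 16)
let labelOffset = parallaxOffset(panDelta: delta, viewWidth: 300, viewHeight: 300, maxTravel: 24)
```

Keeping the math in a pure function like this makes it easy to try different multipliers per layer without running the app on a device.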

As tvOS matures into a full-fledged app platform, with applications presenting content that increasingly isn’t a catalog of movie posters, it’s vitally important that all tvOS app interfaces (not just images) provide great tactile feedback.

-

Today Skye and I released Yayy, a new app for Apple TV for watching channels of GIFs on your TV.

GIFs on the big screen. Browse dozens of hand-picked channels of hypnotic visuals, cute animals, topical memes, sports highlights, and more. Search to start a channel about anything else. Save the channels you like and make a playlist of your favorite GIFs.

While it’s fun to browse and watch from the couch, Yayy is designed for ambient displays. Think with music at parties, or in the waiting room of a really weird doctor’s office. Pick a channel that fits the mood and give your guests something fun to look at.

Yayy is available now from the Apple TV App Store.

-

As of iOS 9, these are the methods a `UIApplicationDelegate` has to implement to handle all the different notification types iOS might throw at an app.

```swift
// Sent to the delegate when a running app receives a local notification.
application(_:didReceiveLocalNotification:)

// Called when your app has been activated because user selected
// a custom action from the alert panel of a local notification.
application(_:handleActionWithIdentifier:forLocalNotification:completionHandler:)

// (New in iOS 9.0) Called when your app has been activated
// by the user selecting an action from a local notification.
application(_:handleActionWithIdentifier:forLocalNotification:withResponseInfo:completionHandler:)

// Called when your app has received a remote notification.
application(_:didReceiveRemoteNotification:)

// Called when your app has received a remote notification.
application(_:didReceiveRemoteNotification:fetchCompletionHandler:)

// Tells the app delegate to perform the
// custom action specified by a remote notification.
application(_:handleActionWithIdentifier:forRemoteNotification:completionHandler:)

// (New in iOS 9.0) Tells the app delegate to
// perform the custom action specified by a remote notification.
application(_:handleActionWithIdentifier:forRemoteNotification:withResponseInfo:completionHandler:)
```

That’s a lot of very similar APIs! Additionally, there are keys for `application(_:didFinishLaunchingWithOptions:)` that ALSO pass in notifications (`UIApplicationLaunchOptionsRemoteNotificationKey`, `UIApplicationLaunchOptionsLocalNotificationKey`). By my count that makes 9 different places to handle notifications. The ins and outs of which method is going to be called for any given interaction is a flow chart that would take up a whiteboard.

In an app with a robust notification scheme, missing any of these can cause head-scratching bugs.
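Until something changes on Apple's side, one way an app might cope is to have every one of those entry points build the same value and forward it to a single handler. The sketch below is only an illustration under that assumption: `NotificationSource`, `IncomingNotification`, and `NotificationRouter` are made-up names that do not exist in UIKit, and the code is plain, current Swift so it runs without an app delegate.

```swift
// Hypothetical model types; none of these exist in UIKit.
enum NotificationSource: Equatable {
    case local
    case remote
}

struct IncomingNotification: Equatable {
    let source: NotificationSource
    let actionIdentifier: String?  // nil when the user did not pick a custom action
    let arrivedAtLaunch: Bool      // true when it came in through the launch options
}

final class NotificationRouter {
    // Everything the app has been asked to handle, in arrival order.
    private(set) var handled: [IncomingNotification] = []

    // The single place where notification behavior would live.
    func handle(_ notification: IncomingNotification) {
        handled.append(notification)
    }
}

let router = NotificationRouter()

// Each delegate callback would translate its arguments and forward them, e.g.:
// a plain remote notification received while running,
router.handle(IncomingNotification(source: .remote, actionIdentifier: nil, arrivedAtLaunch: false))
// a custom action chosen on a local notification,
router.handle(IncomingNotification(source: .local, actionIdentifier: "reply", arrivedAtLaunch: false))
// and a remote notification found in the launch options.
router.handle(IncomingNotification(source: .remote, actionIdentifier: nil, arrivedAtLaunch: true))
```

The delegate still has to implement every method, but each one shrinks to a line or two, and forgetting one is easier to spot.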

Here's my proposal...

Unify remote notifications and `UILocalNotification` notifications under a single `UINotification` superclass. Make all the delegate methods handle the superclass and the delegate can cast to local/remote as needed. Boom. Half the methods. A `UIRemoteNotification` class would be super useful too; it’s still a `Dictionary` for Pete’s sake!

-

Try this: put on u by Kendrick Lamar on Spotify on your phone, then visit this page talking about how incredible that song is. At the bottom of the page is a Spotify embed of the track, look at it. Really look at it…

It’s syncing the playback position of the embed with the iOS app! Ho-ly shit. Very few people will ever see this, and yet it’s there. That’s attention to detail.

-

The new app Byte is really quite something. It’s a deceptively simple app that lets you construct cards of content (“bytes”) by layering and animating text, images, movies, gifs, beats¹, news snippets, or just about anything else you can imagine. The default aesthetic is 90s Trapper Keeper, but there are enough customization options to make almost anything you could think of.

I appreciate that the lack of public profiles forces people to define their own presence on the app. Each byte has a unique address, so the trend seems to be for people to create a profile byte for themselves at their user name. Here’s mine…

Bytes can link to other bytes (in addition to html sites), which gives bytes the flexibility to become many different things. Anyone who has starred a byte gets push notifications when it changes. It has the potential to be an interesting publishing platform. Jenn is using Byte to share egg recipes. Someone is making a Byte version of Oregon Trail. It’s already feeling vibrant in a way that few creative community apps ever do. The way Byte fosters creativity and embraces the early web’s hyperlink and remix culture is a real accomplishment.

-

¹ The emoji sequencer itself would be a great standalone app.

-

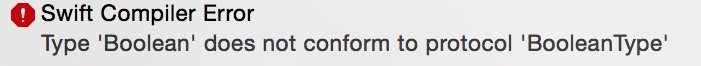

Nope, nothing confusing about the Swift type system whatsoever.